This letter to Nature (also on arXiv) by Havlicek and coauthors deals with gaining a quantum computing advantage for classification problems and is written by quantum physicists. The main reason a friend brought this to my attention is that the classification problem is solved using support vector machines, thus fitting my recent interest in reproducing kernel Hilbert space (RKHS) methods.

The main idea is that the way numerical data is encoded into a quantum state can result in a nonlinear feature map into the very high dimensional quantum Hilbert space. Subsequent computation in the quantum Hilbert space then induces nonlinear methods in the input space, in this case nonlinear classification.

The main contributions over papers that are easier to read coming from an RKHS background (such as Quantum machine learning in feature Hilbert spaces by Schuld et al.) are twofold. First, Havlicek and coauthors use a feature map that does not result in a trivial/useless RKHS. Specifically, they propose to use two layers, each consisting of a diagonal gate and a Hadamard gate, and conjecture that this gives a quantum advantage (while a single layer can be simulated classically). I am quite lost here of course without any background in quantum computing. Second, their classification algorithm was implemented and run on an actual quantum computer, rather than simulated on standard computers. In particular, they use five superconducting transmons, which seems to be a type of qubit implementation and allows for quantum coupling.
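To make the "two layers of a diagonal gate and a Hadamard gate" a bit more concrete for myself, here is a minimal classical simulation for two qubits. Everything specific in it is an assumption on my part: the restriction to two qubits, the particular phase function inside `diagonal_phase`, the function names, and the use of the squared overlap of two feature states as the kernel. It is a sketch of the general construction, not the authors' implementation, and it says nothing about the conjectured hardness, since two qubits are trivially simulable.

```python
import cmath
import math

N_QUBITS = 2            # assumption: smallest non-trivial case
DIM = 2 ** N_QUBITS     # dimension of the quantum Hilbert space


def hadamard_all(state):
    """Apply a Hadamard gate to every qubit (a Walsh-Hadamard transform)."""
    out = []
    for j in range(DIM):
        amp = 0j
        for k in range(DIM):
            # Sign is (-1)^(number of bits set in both j and k).
            sign = -1 if bin(j & k).count("1") % 2 else 1
            amp += sign * state[k]
        out.append(amp / math.sqrt(DIM))
    return out


def diagonal_phase(state, x):
    """Multiply each basis amplitude by a data-dependent phase.

    The phase function below is an illustrative assumption.
    """
    x1, x2 = x
    out = []
    for k, amp in enumerate(state):
        z1 = 1 - 2 * ((k >> 1) & 1)   # Z eigenvalue of the first qubit
        z2 = 1 - 2 * (k & 1)          # Z eigenvalue of the second qubit
        phase = x1 * z1 + x2 * z2 + (math.pi - x1) * (math.pi - x2) * z1 * z2
        out.append(amp * cmath.exp(1j * phase))
    return out


def feature_state(x, layers=2):
    """Map a 2-d input x to a state vector: (Hadamards, then diagonal) per layer."""
    state = [1 + 0j] + [0j] * (DIM - 1)   # start in |00>
    for _ in range(layers):
        state = diagonal_phase(hadamard_all(state), x)
    return state


def kernel(x, z):
    """Squared absolute overlap of the two feature states."""
    overlap = sum(a.conjugate() * b
                  for a, b in zip(feature_state(x), feature_state(z)))
    return abs(overlap) ** 2


if __name__ == "__main__":
    print(kernel((0.3, 1.1), (2.0, 0.5)))
```

Because every step is unitary, the feature states stay normalized, so `kernel(x, x)` is 1 and all kernel values lie between 0 and 1.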

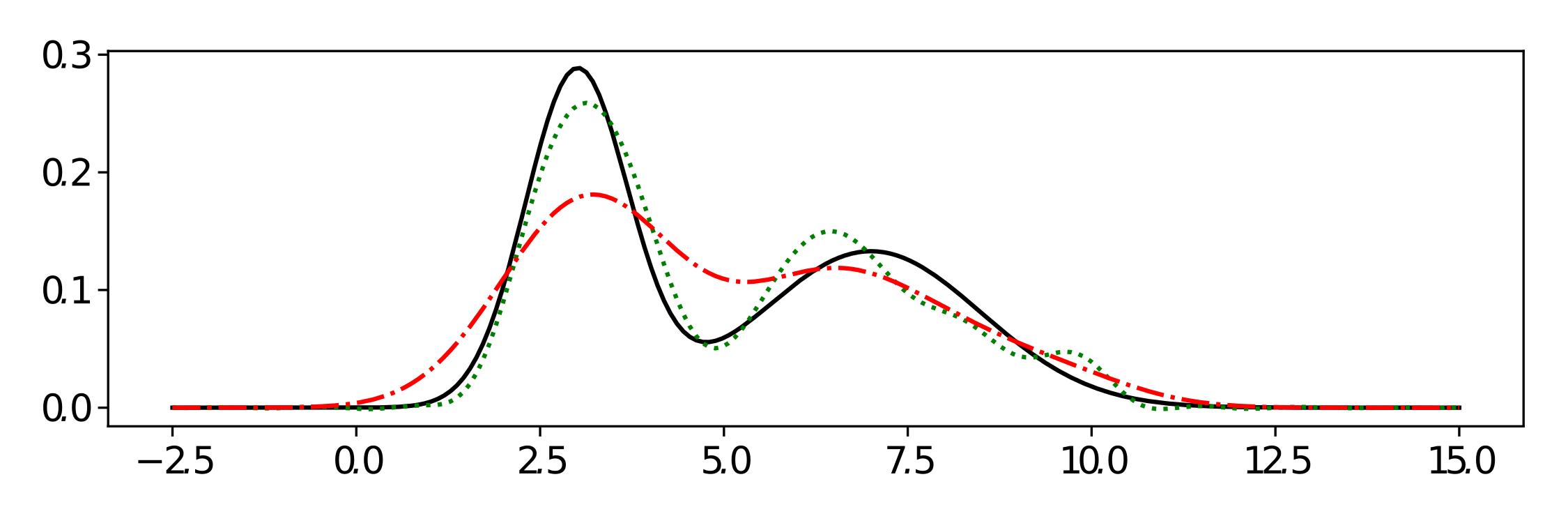

The classification problem they tackle is a toy problem that they construct so as to be perfectly separable with their classification algorithm, which is of course a good sanity check for this first step of developing actual quantum machine learning. The decision of the algorithm for either of the two classes, however, cannot be read from the computing device deterministically, but only stochastically. The solution, seemingly common in quantum computing, is to read out the class repeatedly to obtain samples and compute their empirical average.
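The repeated readout can be mimicked classically with a biased coin. In the sketch below, `p_plus` stands in for the (unknown) probability that a single readout returns class +1; on real hardware that number is never available directly, only the samples are. The function name, the majority rule and the shot counts are my own assumptions for illustration.

```python
import random


def estimate_label(p_plus, shots, rng=None):
    """Read out the class `shots` times and decide by empirical average.

    p_plus: hypothetical probability that one readout yields class +1.
    Returns (p_hat, label) with label in {+1, -1}.
    """
    rng = rng or random.Random()
    hits = sum(1 for _ in range(shots) if rng.random() < p_plus)
    p_hat = hits / shots                 # empirical average of the readouts
    label = 1 if p_hat > 0.5 else -1     # simple majority decision
    return p_hat, label


if __name__ == "__main__":
    rng = random.Random(0)
    for shots in (10, 100, 2000):
        print(shots, estimate_label(0.6, shots, rng))
```

The closer `p_plus` is to 0.5, the more readouts are needed before the majority decision becomes reliable, since the standard error of `p_hat` shrinks only like one over the square root of the number of shots.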

The training then consists of optimizing a bias variable and a parameter that determines the feature map (unless I’m mistaken!) so as to minimize the empirical misclassification error for the training dataset $T$,

$$\frac{1}{|T|} \sum_{x \in T} \Pr\big(\tilde m(x) \neq m(x)\big),$$

where $\tilde m(x)$ is the label assigned by the algorithm and $m(x)$ is the actual label of $x$.
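As a toy version of this training objective, the following sketch computes the fraction of misclassified training points from estimated class probabilities and picks a bias by brute-force grid search. This is a deliberate simplification and not the optimization used in the paper: I count hard mistakes instead of misclassification probabilities, I tune only the bias, and the way the bias shifts the decision threshold, `(1 - bias) / 2`, is an assumption of mine, as are all names.

```python
def empirical_error(p_hats, labels, bias):
    """Fraction of training points whose assigned label differs from the true one.

    p_hats: estimated probabilities of class +1, one per training point.
    labels: true labels in {+1, -1}.
    bias:   shifts the decision threshold (assumed form: (1 - bias) / 2).
    """
    wrong = 0
    for p_hat, y in zip(p_hats, labels):
        predicted = 1 if p_hat > (1 - bias) / 2 else -1
        wrong += predicted != y
    return wrong / len(labels)


def best_bias(p_hats, labels, grid):
    """Return the bias on the grid with the smallest empirical error."""
    return min(grid, key=lambda b: empirical_error(p_hats, labels, b))


if __name__ == "__main__":
    p_hats = [0.8, 0.7, 0.45, 0.3]
    labels = [1, 1, 1, -1]
    print(best_bias(p_hats, labels, [-0.2, 0.0, 0.2]))
```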

They use two main approaches. The first works directly with the feature vectors of the input points and finds a separating hyperplane in the quantum Hilbert space itself. This is what they call the variational algorithm. The other approach uses the canonical Aronszajn features, i.e. the mapping of an input point $x$ to the function $k(x, \cdot)$, where $k$ is the kernel, and then constructs a separating hyperplane as a linear combination of the functions induced by the training points.
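The canonical approach can be sketched classically as a decision function that is a weighted sum of kernel evaluations at the training points. To keep the example self-contained I swap in two things that are not from the paper: a Gaussian kernel as a stand-in for the kernel estimated on the quantum device, and a kernel perceptron instead of a support vector machine for finding the weights. All names are hypothetical.

```python
import math


def gaussian_kernel(x, z, width=0.5):
    """Classical stand-in kernel (assumption; not the quantum kernel)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-sq_dist / (2 * width ** 2))


def decision_value(x, points, labels, alphas, kernel):
    """Linear combination of the functions k(x_i, .) evaluated at x."""
    return sum(a * y * kernel(p, x) for a, y, p in zip(alphas, labels, points))


def train_kernel_perceptron(points, labels, kernel, epochs=100):
    """Kernel perceptron: raise the weight of every misclassified point."""
    alphas = [0.0] * len(points)
    for _ in range(epochs):
        mistakes = 0
        for i, (x, y) in enumerate(zip(points, labels)):
            if y * decision_value(x, points, labels, alphas, kernel) <= 0:
                alphas[i] += 1.0
                mistakes += 1
        if mistakes == 0:   # every training point is classified correctly
            break
    return alphas


def classify(x, points, labels, alphas, kernel):
    return 1 if decision_value(x, points, labels, alphas, kernel) > 0 else -1


if __name__ == "__main__":
    # XOR-like toy data, not linearly separable in the input space.
    points = [(0, 0), (1, 1), (0, 1), (1, 0)]
    labels = [1, 1, -1, -1]
    alphas = train_kernel_perceptron(points, labels, gaussian_kernel)
    print([classify(p, points, labels, alphas, gaussian_kernel) for p in points])
```

Note that `classify` needs all training points (or at least those with nonzero weight) at prediction time, and training needs kernel values for pairs of training points.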

Personally, I would think that the variational approach makes much more sense in the mid to long term, as the canonical approach does not yield a more powerful method but induces a large memory cost for large datasets. Then again, I’m completely lost when thinking about how to invert a matrix in the quantum feature space or compute solutions of systems of linear equations.

Overall, I think that this is a very interesting path to follow and am keen on finding out how quantum computing and machine learning/statistics might combine in beneficial ways.